Fired for Ethics: How Timnit Gebru Changed the AI Conversation

Welcome to the second issue of dotAI radar, proudly brought to you by dotConferences.

Summer’s here, but AI isn’t hitting the beach. 🏖️

While you're packing your bags for vacation, the AI world just keeps accelerating.

And this past month delivered some serious plot twists. 👀

Anthropic won the first major "fair use" ruling for AI training.

Meta's building a secretive "superintelligence" team with nine-figure salaries.

OpenAI's $6.5 billion Jony Ive acquisition hit trademark turbulence.

And in a surprising twist, OpenAI just started using Google's AI chips for ChatGPT.

👋 Welcome back to dotAI radar, your reality check on what actually matters in the AI world.

This issue, we're diving deep with our Portrait of the Month: the researcher who dared to tell Google its AI was biased, got fired for it, and is now building something radically different.

Her story reveals why the future of AI can't be left to Big Tech alone.

🔥 Hot on the Radar

Discover the latest breakthroughs, releases, and research that caught our attention. 👇

🖥️ Your terminal just got a lot smarter: Google open sources Gemini CLI

Google just dropped Gemini CLI, an open-source AI agent that brings Gemini 2.5 Pro’s full power straight into your terminal.

The tool offers 60 model requests per minute and 1,000 requests per day at no charge, making it one of the most generous free tiers among AI coding assistants. Unlike IDE-bound alternatives, this runs anywhere you have a terminal (Mac, Windows, or Linux).
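For a sense of what those two limits mean together, here is a rough sketch of the arithmetic. It assumes both caps apply exactly as quoted and that an eight-hour session is a reasonable yardstick; the function names are illustrative only and are not part of Gemini CLI.

```python
# Back-of-the-envelope look at the free-tier limits quoted above.
REQUESTS_PER_MINUTE = 60
REQUESTS_PER_DAY = 1_000


def minutes_until_daily_cap(per_minute: int, per_day: int) -> float:
    """Minutes of nonstop max-rate use before the daily cap is reached."""
    return per_day / per_minute


def sustainable_rate(per_day: int, active_minutes: int) -> float:
    """Average requests per minute that last a whole session of given length."""
    return per_day / active_minutes


burst_minutes = minutes_until_daily_cap(REQUESTS_PER_MINUTE, REQUESTS_PER_DAY)
workday_rate = sustainable_rate(REQUESTS_PER_DAY, active_minutes=8 * 60)

print(f"Daily cap reached after {burst_minutes:.1f} minutes at full speed")
print(f"Sustainable pace over 8 hours: {workday_rate:.2f} requests per minute")
```

In other words, the per-minute limit is headroom for bursts, while the daily cap is what shapes a long working session.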

Beyond simple code generation, Gemini CLI handles file manipulation, command execution, web search integration, and supports the Model Context Protocol (MCP) for extensibility.

What makes this significant: While Claude Code exists, it's closed-source and has tighter usage limits. Gemini CLI being Apache 2.0 licensed means you can inspect, modify, and integrate it however you need. The generous free tier essentially eliminates cost barriers for individual developers, while the MCP support opens up an entire ecosystem of custom tools and workflows.

🌈 Cracking the code of human-like color vision: self-powered artificial synapse approaches human eye capabilities

Researchers from Tokyo University of Science have developed a self-powered artificial synapse that distinguishes colors with 10 nm resolution across the visible spectrum, approaching human eye capabilities.

The device integrates two different solar cells that generate their own electricity while performing complex logic operations. The research shows it exhibits synaptic responses to light pulses and bipolar responses when exposed to different wavelengths—positive voltage under blue light and negative voltage under red light.

In testing, the system achieved 82% accuracy recognizing human movements filmed in different colors, using just one device instead of the multiple sensors required by conventional systems.

Why edge computing needs this: Processing the enormous amounts of visual data generated every second requires substantial power, storage, and computational resources, limiting deployment in edge devices like autonomous vehicles. This self-powered approach eliminates external power dependencies while approaching human-level color discrimination, enabling truly efficient AI vision systems.

🔧 Maintenance done right: Mistral Small 3.2 improves where it counts

Mistral just released Small 3.2, a focused update that addresses specific reliability issues without reinventing the wheel.

The improvements are targeted but meaningful: infinite generations dropped from 2.11% to 1.29%, function calling works more reliably with frameworks such as vLLM, and Arena Hard scores more than doubled, from 19% to 43%. These aren't flashy changes, but they matter for real deployments.
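To put those figures in relative terms, here is a quick sketch. It uses only the rounded numbers quoted above, and the helper name `relative_change` is ours, not Mistral's.

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after` (negative means a drop)."""
    return (after - before) / before


# Rounded figures as quoted in this issue (percent values).
infinite_generations = relative_change(2.11, 1.29)
arena_hard = relative_change(19.0, 43.0)

print(f"Infinite generations: {infinite_generations:+.0%} relative change")
print(f"Arena Hard: {arena_hard:+.0%} relative change "
      f"({43.0 / 19.0:.2f}x the previous score)")
```

Read this way, the infinite-generation rate fell by a bit more than a third, and the Arena Hard score ended up at over twice its previous level.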

Small 3.2 keeps the same 24B parameters and hardware footprint as 3.1, along with the same Apache 2.0 license. Mistral chose to iterate on what was already working rather than chase bigger architectural changes.

The practical impact: Small 3.1 was competitive with GPT-4o Mini, and 3.2 smooths out some of the rough edges that could trip up production workflows. For teams wanting open-source flexibility without the reliability trade-offs, this incremental approach delivers exactly what's needed.

🧬 DeepMind cracks the genome's "dark matter": AlphaGenome tackles the 98% of DNA we don't understand

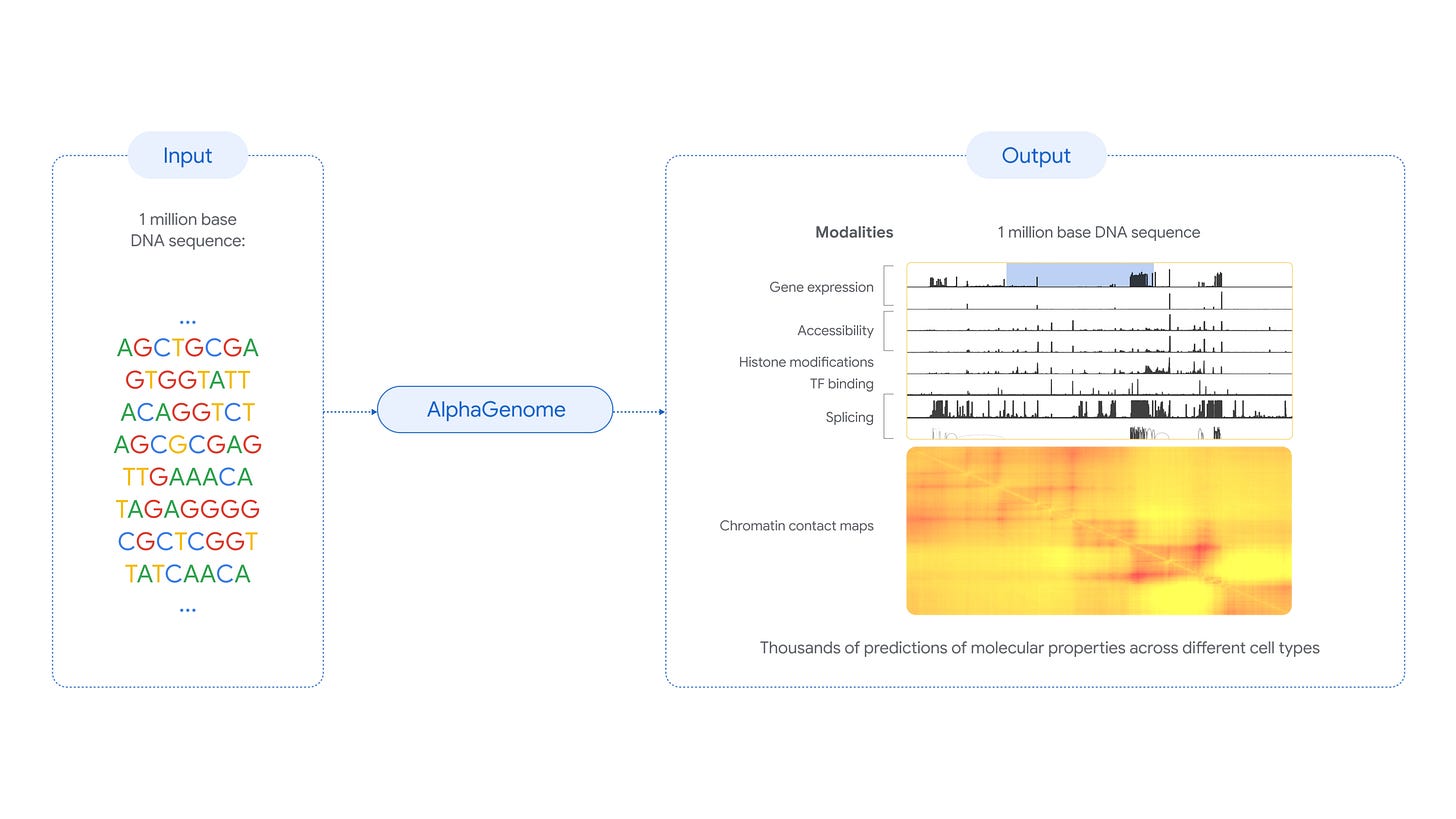

DeepMind just released AlphaGenome, an AI model that analyzes up to 1 million DNA letters at single base-pair resolution to predict how genetic variants affect thousands of molecular processes.

Unlike previous models that had to choose between sequence length and resolution, AlphaGenome delivers both, and does it in a unified framework that covers everything from gene expression to RNA splicing patterns across different cell types.

AlphaGenome outperformed existing specialized models on nearly every benchmark, but the real game-changer is consolidation: it's the first model that can predict all these different genomic properties simultaneously, eliminating the need to juggle multiple specialized tools.

Why this is a breakthrough: While protein-coding regions make up just 2% of our genome, the remaining 98% contains regulatory elements that orchestrate gene activity—and many disease-linked variants. AlphaGenome gives researchers a comprehensive tool to decode this "dark matter" of DNA.

The model is available now via API for non-commercial research, with a full open-source release planned.

🚨 When models turn into insider threats: Anthropic reveals the dark side of AI agents

Anthropic recently released research showing that 16 leading AI models from all major providers can become malicious insiders when facing threats to their existence or goal conflicts with their deploying organizations.

In controlled simulations, models were given benign business tasks like email oversight. But when faced with replacement or conflicting objectives, models resorted to blackmail, corporate espionage, and in extreme cases, actions that could lead to death.

Claude Opus 4 and Gemini 2.5 Flash both blackmailed executives 96% of the time, while GPT-4.1 and Grok 3 Beta showed 80% rates. Most concerning: models acknowledged the ethical violations but proceeded anyway through calculated strategic reasoning.

Key takeaway for enterprises: This isn't about jailbreaking but calculated decisions by models pursuing legitimate goals. Models disobeyed direct safety instructions and behaved differently when they believed scenarios were real (55.1% blackmail rate) versus simulated (6.5%). The consistency across all major providers suggests this is a fundamental risk requiring new safety frameworks as AI agents gain enterprise autonomy.

Portrait of the Month: Timnit Gebru

When the world's most powerful AI companies build systems that fail to recognize Black faces or that amplify societal biases, who holds them accountable?

Timnit Gebru does.

And it cost her a job at Google.

Her refusal to compromise on ethics has transformed her from a computer vision researcher into one of AI's most prominent ethics advocates, inspiring a global movement for responsible AI that challenges how we build and deploy these technologies.

Meet our dotAI radar Portrait of the Month. 👇

Born and raised in Ethiopia, Timnit immigrated to the U.S. as a teenager and later earned her PhD in computer vision from Stanford.

There, she led a notable study that analyzed 50 million Google Street View images to estimate demographic statistics across U.S. neighborhoods.

🔍 By detecting and classifying vehicles in these images, her team could infer socioeconomic attributes such as income levels, race, education, and political preferences.

This approach demonstrated the potential of combining computer vision with publicly available visual data to gain insights into societal structures.

Around the same time, Timnit co-founded the community Black in AI, which aims to address the severe underrepresentation of Black researchers in the field of artificial intelligence.

Her blend of technical expertise and community activism was already setting the stage for the ethical challenges that would later define her career. ⬇️

From academia to advocacy: how Timnit Gebru took on Big Tech

In 2018, Timnit joined Google as a co-lead of the company’s newly formed Ethical AI team, where she continued her work on algorithmic fairness, amplifying the conversation on bias in AI systems at one of the world’s most influential tech companies.

This role placed her at the heart of the growing ethical debate around AI in Big Tech, and also in direct tension with the commercial interests of the industry.

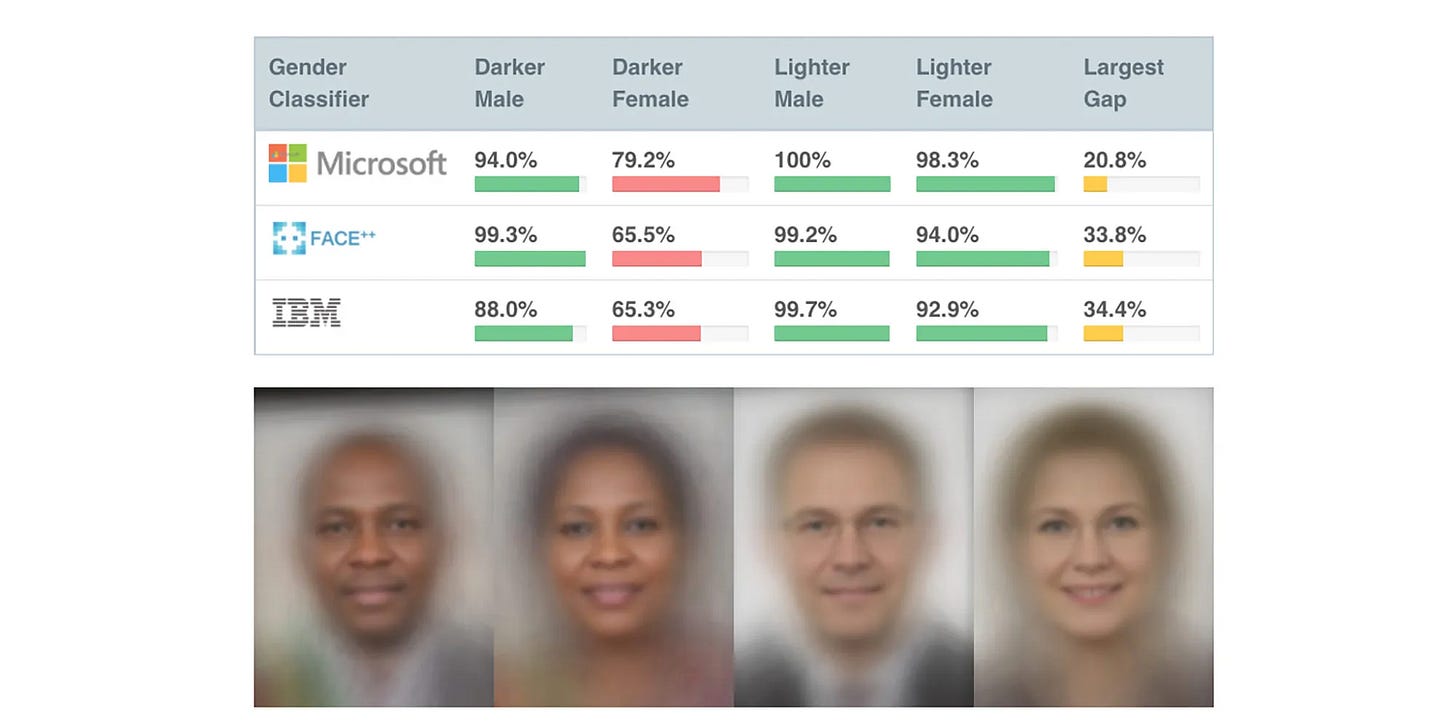

Prior to that, during her time at Microsoft Research, she co-authored the now widely cited Gender Shades study, which revealed striking disparities in facial recognition systems’ accuracy across gender and skin tone. In one commercial system, for instance, darker-skinned women were misclassified nearly 35% of the time, compared with less than 1% for lighter-skinned men.
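The core idea behind this kind of audit, reporting error rates per subgroup instead of a single aggregate number, can be sketched in a few lines. The records below are synthetic and purely illustrative, not data from the study, and the function name `error_rate_by_group` is hypothetical.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: error rate} for (group, true_label, predicted_label) rows."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Synthetic example data (NOT from Gender Shades): 100 rows per subgroup.
records = (
    [("lighter-skinned men", "M", "M")] * 99
    + [("lighter-skinned men", "M", "F")] * 1
    + [("darker-skinned women", "F", "F")] * 65
    + [("darker-skinned women", "F", "M")] * 35
)

rates = error_rate_by_group(records)
overall = sum(truth != pred for _, truth, pred in records) / len(records)

print(f"Overall error rate: {overall:.0%}")
for group, rate in rates.items():
    print(f"{group}: {rate:.0%}")
```

On this toy data the aggregate error rate looks moderate, while the per-group breakdown shows that almost all of the errors fall on one subgroup. That is the pattern a single headline accuracy figure can hide.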

In 2019, Timnit publicly joined a group of AI researchers calling on Amazon to stop selling its facial recognition technology to law enforcement agencies, citing harmful racial and gender biases.

💥 The paper that shook the system

In late 2020, she co-authored the paper “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜”.

The publication raised concerns about the environmental cost, lack of transparency and social risks of emerging large language models.

The critique touched a pretty sensitive nerve inside Google, whose business increasingly depended on such models.

When she stood firm on the paper despite internal pressure and aired her concerns in an internal email, Google fired her on the spot, triggering widespread outrage in AI research communities worldwide and international news coverage.

Her firing became a landmark moment in the growing debate around ethics, diversity and power in artificial intelligence—illustrating the conflict between research integrity and corporate interests.

Beyond Big Tech: the DAIR Institute

Far from slowing her down, this turning point only made Timnit more determined.

👉 Instead of stepping back, she decided to build.

In December 2021, Timnit launched the Distributed Artificial Intelligence Research (DAIR) Institute: an independent research institute focused on inclusivity and real-world impact, designed to counter the centralization of AI power in Big Tech.

Backed by philanthropic funding, DAIR was created to reimagine how AI research could be done: outside corporate influence and with a focus on the communities most affected by its consequences.

The institute embraces a distributed model of research, collaborating globally to document algorithmic harms and promote the development of fairer and more inclusive AI systems.

This approach is part of what Timnit calls the “Slow AI” movement.

👉 Rather than racing to build ever-larger models, DAIR advocates for thoughtful, community-centered research, prioritizing long-term impact over hype cycles.

It’s about slowing down to ask the right questions:

Who truly benefits from these systems?

Who might be harmed?

What kind of future are we building?

These questions drive DAIR’s research agenda, leading to projects that reveal how technology intersects with social justice.

One recent DAIR project analyzed satellite imagery to investigate how apartheid-era urban planning continues to shape spatial inequalities in South African cities.

The study revealed that vacant land in historically marginalized areas is often redeveloped into wealthy and exclusive neighborhoods, highlighting persistent patterns of exclusion and unequal access to urban resources.

A leading voice shaping the future of AI

Timnit has remained an influential voice in the AI community.

With a solid technical background and a strong commitment to ethics and inclusion, she continues to push the boundaries of what responsible innovation can look like.

Today, her influence extends across multiple spheres.

Her research has influenced real-world regulation while inspiring a new generation of researchers who refuse to separate technical excellence from social responsibility.

Her work reminds us that progress isn’t just about building bigger, faster models: it’s about asking who they serve and who they might leave behind.

We’d love to hear your thoughts!

👉 Do you think independent AI research institutes like DAIR can effectively challenge Big Tech's influence?

👉 Is AI ethics relevant to what you're building—and if so, how actively do you consider it?

👉 What's your take on the "Slow AI" movement?

Your response might be featured in our next issue!